Unlocking New Possibilities: How Fine-Tuning GPT-4o-mini Transforms Business Applications in AI

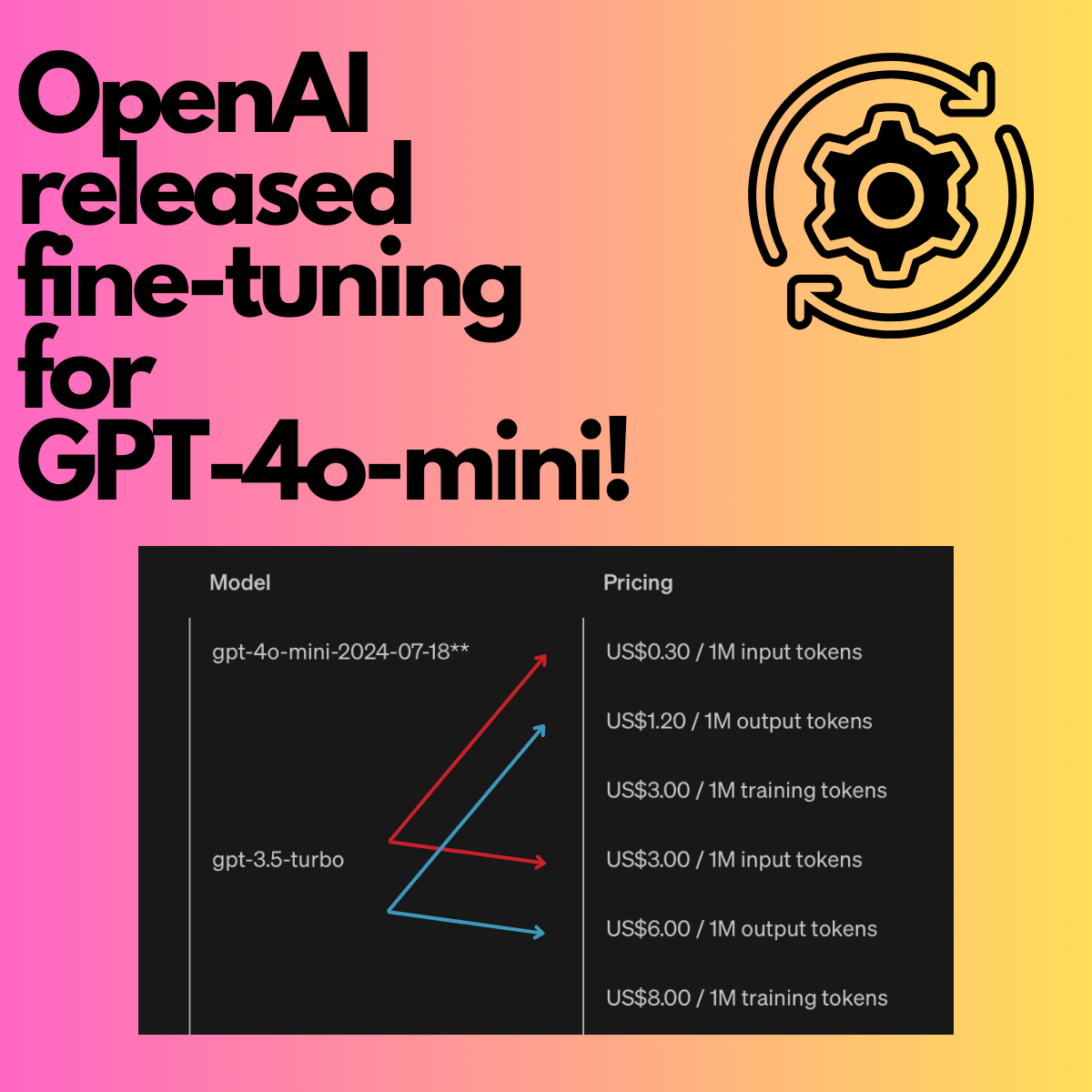

Wow, OpenAI just released fine-tuning for GPT-4o-mini! This is huge for anyone exploring LLMs in business; here is why.

First, let’s clarify what fine-tuning means.

Initially, GPT models undergo extensive training on a diverse corpus, including books, articles, websites, and other text forms. This stage aims to develop a broad understanding of language, context, and world knowledge.

This is what made GPT so good in the first place.

So, what if GPT still needs to be improved for your use case? You fine-tune.

Fine-tuning in the context of OpenAI’s GPT models refers to taking a pre-trained model, like GPT-3 or GPT-4, and further training it on a specific dataset to specialize its capabilities towards particular tasks or knowledge domains.

Here is an example from the job board industry. If I use GPT to classify jobs in categories, I can provide a dataset of prompts (instructions + job descriptions) and the expected results.

You fine-tune by providing examples in a JSONL format, like this:

This data is then used to fine-tune GPT and provide me with my fine-tuned API endpoint.

Fine-tuning is so good that with enough examples (200+), you can get an even better performance with GPT-3.5 from doing zero-shot with GPT-4 at a significantly lower cost (4-6 times lower).

How does GPT-4o-mini change the current business landscape of fine-tuning LLMs?

GPT-4o mini is a new, cost-efficient AI model from OpenAI designed to enhance accessibility and reduce costs for developers. This smaller version of the GPT-4o excels in various tasks, including textual intelligence, multimodal reasoning, and coding, outperforming older models like GPT-3.5 Turbo. It initially supports text and vision inputs, with plans to expand to other modalities. GPT-4o mini incorporates advanced safety measures, costs significantly less than previous models, and is available through multiple APIs, making it ideal for various applications, from customer support to data extraction.

This new model is very cost-efficient but still intelligent and fast. It is excellent to make multiple short calls instead of one long prompt. It also has a larger context (128K tokens) and an improved tokenizer that improves non-English performance.

It is a great, cheap model but has limited use cases compared to a fine-tuned GPT-3.5.

But now, you can also fine-tune GPT-4o-mini, and I am very excited about this!

GPT-4o mini is 90% cheaper on input tokens and 80% cheaper on output tokens than GPT-3.5 Turbo. GPT-4o mini is also 2x more affordable to train than GPT-3.5 Turbo.

GPT-4o mini has 4x more extended training context (65k tokens) and 8x longer inference context (128k tokens) than GPT-3.5 Turbo.

What is my game plan for the next two weeks?

I will focus on three things:

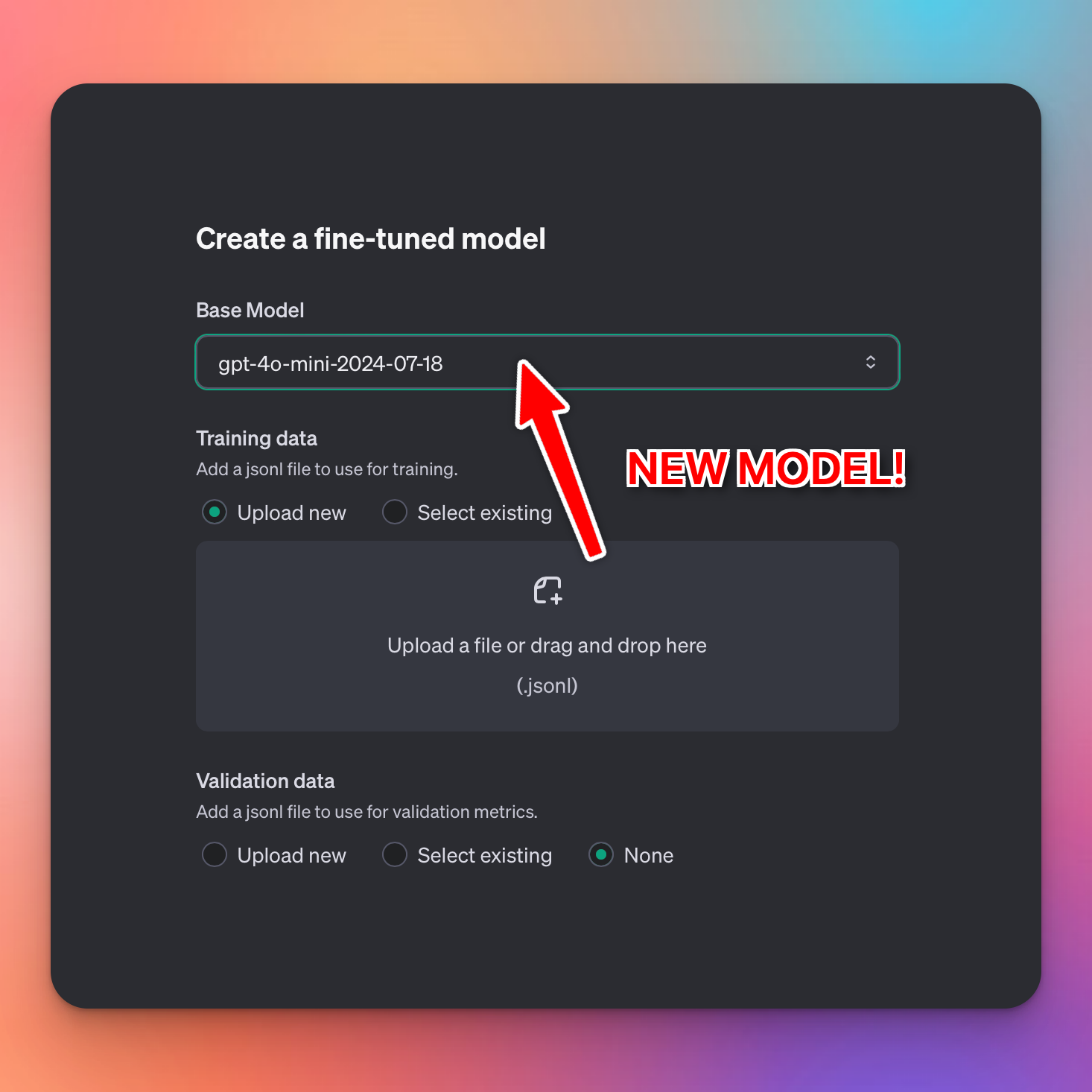

Migrating existing GPT-3.5 fine-tuned models

I'm migrating all my fine-tuned GPT-3.5 models to GPT-4o-mini. Training is free for the next two months, and I already have the training data. Migrating models is just a few clicks, as long as you still have the training data with you (always keep fine-tuned training data).

Test all my GPT-4 and GPT-4o processes on GPT-4o-mini.

Depending on the performance, I can either migrate straight to GPT-4o-mini or fine-tune with all collected training data that the higher-level models already generated for me.

Reevaluate use cases for international job boards.

So, for non-English languages, even fine-tuned GPT-3.5 did not work. The updated tokenizer and the opportunity to fine-tune a more intelligent model than 3.5 are enormous enablers for internationalization. I expect to see some new use cases here.

Conclusion: Impact of GPT-4o-mini fine-tuning on business

In the grand scheme of things, this strategy could be more sustainable. GPT-4o has already significantly reduced the cost compared to GPT-4. An even better, new model that can be fine-tuned has reduced the cost of usage even more and made using the expensive, revenue-bringing models less attractive.

I know what OpenAI will say—it’s designed to expand the accessibility of AI applications by being significantly cheaper and more efficient, which aligns with OpenAI’s goal of broadening the reach and utility of artificial intelligence.

However, the question remains: if you constantly push pricing down and subvention usage with VC money, how will you create a long-term profitable business model?